AWS EC2 Single Instance Deployment

Single AWS EC2 instance with Podman for development, testing, and small-scale deployments

Advanced

2 - 5 hr

- AWS account with access to Bedrock (see below for setup)

- AWS CLI installed and configured

- Pulumi installed locally

- Python 3.11+ for CLI tools

- Basic command-line familiarity

Deploy a TrustGraph development environment on a single AWS EC2 instance with Podman containers and AWS Bedrock integration using Infrastructure as Code.

Overview

This guide walks you through deploying TrustGraph on a single AWS EC2 instance using Pulumi (Infrastructure as Code). The deployment automatically provisions an EC2 instance running Podman containers integrated with AWS Bedrock for LLM services.

Pulumi is an open-source Infrastructure as Code tool that uses general-purpose programming languages (TypeScript/JavaScript in this case) to define cloud infrastructure. Unlike manual deployments, Pulumi provides:

- Reproducible, version-controlled infrastructure

- Testable and retryable deployments

- Automatic resource dependency management

- Simple rollback capabilities

Once deployed, you’ll have a complete TrustGraph stack running on AWS infrastructure with:

- Single EC2 instance (t3.2xlarge, configurable)

- AWS Bedrock integration (Claude 3.5 Haiku)

- Complete monitoring with Grafana and Prometheus

- Web workbench for document processing and Graph RAG

- Secure IAM role-based authentication

Development Deployment Only

This single instance deployment is appropriate for:

- Development and testing

- Experimentation and learning

- Quick prototyping

- Analysis and evaluation

Not recommended for production use: this deployment has no redundancy or resilience. It may also be complex to securely integrate a Podman deployment with AWS VPCs for inter-service networking.

For production deployments, consider:

- AWS RKE Deployment - Multi-node Kubernetes cluster

Why AWS EC2 Single Instance for TrustGraph?

AWS EC2 single instance deployment offers several advantages for development:

- Simple Setup: No complex Kubernetes configuration required

- Direct Access: SSH directly to the instance for debugging

- Cost-Effective: Lower cost than multi-node clusters for testing

- AWS Bedrock Integration: Native access to Claude and other foundation models

- Automatic Credentials: IAM role handles authentication without key management

- Rapid Iteration: Quick deployment and teardown cycles

Ideal for developers, researchers, and teams evaluating TrustGraph capabilities before production deployment.

Getting ready

AWS Account

You’ll need an AWS account with access to AWS Bedrock. If you don’t have one:

- Sign up at https://aws.amazon.com/

- Complete account verification

- Set up billing (new accounts receive free tier benefits)

Enable AWS Bedrock Models

AWS Bedrock requires explicit model access enablement:

- Navigate to the AWS Bedrock Console

- Select your deployment region (e.g., us-east-1)

- Go to Model access in the left navigation

- Click Manage model access

- Enable access to:

  - Anthropic Claude 3.5 Haiku (recommended default)

  - Mistral Nemo Instruct (optional alternative)

  - Other models as desired

- Click Save changes

Model availability varies by region. See AWS Bedrock model availability.

Install AWS CLI

Install the AWS Command Line Interface:

Linux

curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"

unzip awscliv2.zip

sudo ./aws/install

MacOS

curl "https://awscli.amazonaws.com/AWSCLIV2.pkg" -o "AWSCLIV2.pkg"

sudo installer -pkg AWSCLIV2.pkg -target /

Or via Homebrew:

brew install awscli

Windows

Download the installer from aws.amazon.com/cli/

Verify installation:

aws --version

Configure AWS Authentication

Configure AWS credentials for Pulumi deployment:

aws configure

Provide:

- AWS Access Key ID: Your access key

- AWS Secret Access Key: Your secret key

- Default region: e.g., us-east-1

- Default output format: json

Alternatively, use AWS profiles:

export AWS_PROFILE=your-profile-name
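Before handing these credentials to Pulumi, it can help to confirm which identity the AWS CLI actually resolves. The sketch below wraps aws sts get-caller-identity in a small shell function. The function name show_aws_identity is illustrative and not part of any AWS or TrustGraph tooling.

```shell
# Print the account ID and caller ARN that the current credentials
# (or the profile named in AWS_PROFILE) resolve to.
# Returns non-zero if the CLI cannot find valid credentials.
show_aws_identity() {
    aws sts get-caller-identity \
        --query '[Account, Arn]' \
        --output text
}

# Usage (requires the AWS CLI and configured credentials):
# show_aws_identity
```

If the account or ARN printed is not the one you expect, fix the profile or keys before continuing, since Pulumi will create resources under that identity.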

Python

You need Python 3.11 or later installed for the TrustGraph CLI tools.

Check your Python version

python3 --version

If you need to install or upgrade Python, visit python.org.
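The version requirement can also be checked in a script rather than by eye. This is a minimal sketch: version_at_least is a hypothetical helper name, and it assumes python3 --version prints a string of the form "Python 3.12.1".

```shell
# Succeed when a "major.minor[.patch]" version string is at least the
# required major and minor numbers.
# Usage: version_at_least <version> <major> <minor>
version_at_least() {
    ver_major=${1%%.*}
    ver_rest=${1#*.}
    ver_minor=${ver_rest%%.*}
    [ "$ver_major" -gt "$2" ] ||
        { [ "$ver_major" -eq "$2" ] && [ "$ver_minor" -ge "$3" ]; }
}

# Keep only the second field of the "Python X.Y.Z" line.
if command -v python3 >/dev/null 2>&1; then
    py_version=$(python3 --version 2>&1 | awk '{print $2}')
    if version_at_least "$py_version" 3 11; then
        echo "Python $py_version is new enough"
    else
        echo "Python $py_version is older than 3.11"
    fi
fi
```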

Pulumi

Install Pulumi on your local machine:

Linux

curl -fsSL https://get.pulumi.com | sh

MacOS

brew install pulumi/tap/pulumi

Windows

Download the installer from pulumi.com.

Verify installation:

pulumi version

Full installation details are at pulumi.com.

Node.js

The Pulumi deployment code uses TypeScript/JavaScript, so you’ll need Node.js installed:

- Download: nodejs.org (LTS version recommended)

- Linux: sudo apt install nodejs npm (Ubuntu/Debian) or sudo dnf install nodejs (Fedora)

- MacOS: brew install node

Verify installation:

node --version

npm --version

Prepare the deployment

Get the Pulumi code

Clone the TrustGraph AWS EC2 Pulumi repository:

git clone https://github.com/trustgraph-ai/pulumi-trustgraph-ec2.git

cd pulumi-trustgraph-ec2/pulumi

Install dependencies

Install the Node.js dependencies for the Pulumi project:

npm install

Configure AWS region

Set your AWS region for Pulumi:

pulumi config set aws:region us-east-1

Available Bedrock regions include:

- us-east-1 (N. Virginia)

- us-west-2 (Oregon)

- eu-central-1 (Frankfurt)

- ap-southeast-1 (Singapore)

- ap-northeast-1 (Tokyo)

Refer to AWS Bedrock regions for a complete list.

Configure Pulumi state

You need to tell Pulumi which state to use. You can store this in an S3 bucket, but for experimentation, you can just use local state:

pulumi login --local

Pulumi encrypts any secrets stored in its state using a passphrase. In a production or shared environment, you would need to evaluate the security arrangements around secrets.

This guide sets the passphrase to the empty string, on the assumption that unprotected secrets are acceptable for a development deployment.

export PULUMI_CONFIG_PASSPHRASE=

Create a Pulumi stack

Initialize a new Pulumi stack for your deployment:

pulumi stack init dev

You can use any name instead of dev - this helps you manage multiple deployments (dev, staging, prod, etc.).

Configure the stack

Apply settings for instance type and AWS Bedrock model:

pulumi config set instanceType t3.2xlarge

pulumi config set bedrockModel anthropic.claude-3-5-haiku-20241022-v1:0

Available instance types:

- t3.2xlarge - 8 vCPUs, 32 GB RAM (recommended)

- t3.xlarge - 4 vCPUs, 16 GB RAM (minimum)

- m5.2xlarge - 8 vCPUs, 32 GB RAM (better performance)

- m5.4xlarge - 16 vCPUs, 64 GB RAM (high performance)

Available Bedrock models:

- anthropic.claude-3-5-haiku-20241022-v1:0 (fast, cost-effective)

- anthropic.claude-3-5-sonnet-20241022-v2:0 (advanced reasoning)

- mistral.mistral-nemo-instruct-2407-v1:0 (alternative)

Refer to the repository’s README for additional configuration options.

Deploy with Pulumi

Preview the deployment

Before deploying, preview what Pulumi will create:

pulumi preview

This shows all the resources that will be created:

- EC2 instance with specified type

- IAM role with Bedrock permissions

- Security group for SSH access

- EBS volumes for storage

- SSH key pair for instance access

- Elastic IP for static addressing

- User data script for automatic setup

Review the output to ensure everything looks correct.

Deploy the infrastructure

Deploy the complete TrustGraph stack:

pulumi up

Pulumi will ask for confirmation before proceeding. Type yes to continue.

The deployment typically takes 10 - 15 minutes and progresses through these stages:

- Creating EC2 infrastructure (2-3 minutes)

  - Provisions EC2 instance

  - Creates IAM role and instance profile

  - Sets up security groups

  - Assigns Elastic IP

- Configuring instance (5-8 minutes)

  - Installs Podman and dependencies

  - Downloads TrustGraph containers

  - Configures Podman Compose

  - Sets up AWS Bedrock integration

- Starting TrustGraph (3-5 minutes)

  - Starts all containers

  - Initializes services

  - Runs health checks

You’ll see output showing the creation progress of all resources.

Retrieve deployment outputs

After deployment completes, Pulumi will display important outputs:

pulumi stack output

Key outputs:

- instanceIp - Public IP address of the EC2 instance

- sshCommand - Command to SSH to the instance

- privateKey - SSH private key (saved to ssh-private.key)

The SSH private key is automatically saved to ssh-private.key in the current directory.

Set SSH key permissions

Set correct permissions on the SSH private key:

chmod 600 ssh-private.key

Access the instance

SSH access with port forwarding

Access the instance with SSH port forwarding for web services:

ssh -L 3000:localhost:3000 -L 8888:localhost:8888 -L 8088:localhost:8088 \

-i ssh-private.key ubuntu@$(pulumi stack output instanceIp)

This creates port forwards for:

- Port 3000 - Grafana monitoring dashboard

- Port 8888 - TrustGraph web workbench

- Port 8088 - TrustGraph API gateway
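If you need to forward additional ports later, the -L arguments can be generated rather than typed by hand. The following is a sketch only; forward_args is a hypothetical helper, and it assumes each service should be reachable on the same port number locally as on the instance, as in the command above.

```shell
# Turn a list of ports into "-L port:localhost:port" arguments for ssh.
forward_args() {
    for port in "$@"; do
        printf '%s %s:localhost:%s ' -L "$port" "$port"
    done
}

# Usage: the three ports from this guide. The $(forward_args ...) part is
# left unquoted on purpose so the shell splits it into separate arguments.
# ssh $(forward_args 3000 8888 8088) -i ssh-private.key \
#     "ubuntu@$(pulumi stack output instanceIp)"
```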

Verify container status

Once connected via SSH, verify all containers are running:

sudo podman ps -a

All containers should show Up status. If some containers are still starting, wait 1-2 minutes and check again.
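With many containers, scanning the full table for problems is tedious. The sketch below filters the listing down to containers that are not up. The helper name containers_not_up is hypothetical, and it assumes the status text starts with the word Up for healthy containers, as shown by podman ps.

```shell
# Read "name status..." lines on stdin and print the names of containers
# whose status does not begin with "Up". Prints nothing when all are up.
containers_not_up() {
    awk '$2 != "Up" { print $1 }'
}

# Usage on the instance: print one "name status" line per container,
# then keep only the ones that need attention.
# sudo podman ps -a --format '{{.Names}} {{.Status}}' | containers_not_up
```

An empty result means every container reports an Up status.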

Install CLI tools

Now install the TrustGraph command-line tools. These tools help you interact with TrustGraph, load documents, and verify the system.

Create a Python virtual environment and install the CLI:

python3 -m venv env

source env/bin/activate # On Windows: env\Scripts\activate

pip install trustgraph-cli

Startup period

It can take 2-3 minutes for all services to stabilize after deployment. Services like Pulsar and Cassandra need time to initialize properly.
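Rather than waiting a fixed time, you can poll until the system reports healthy. This is a generic retry sketch, not part of the TrustGraph CLI: retry_until is a hypothetical helper, and the usage line assumes tg-verify-system-status exits with a non-zero status when checks fail, which this guide does not confirm.

```shell
# Run a command repeatedly until it succeeds or the attempts run out.
# Usage: retry_until <attempts> <delay-seconds> <command> [args...]
retry_until() {
    attempts=$1
    delay=$2
    shift 2
    n=1
    while [ "$n" -le "$attempts" ]; do
        if "$@"; then
            return 0
        fi
        # Only wait if another attempt is still to come.
        if [ "$n" -lt "$attempts" ]; then
            echo "attempt $n of $attempts failed; retrying in ${delay}s" >&2
            sleep "$delay"
        fi
        n=$((n + 1))
    done
    return 1
}

# Usage: up to 20 attempts, 15 seconds apart.
# retry_until 20 15 tg-verify-system-status
```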

Verify system health

tg-verify-system-status

If everything is working, the output looks something like this:

============================================================

TrustGraph System Status Verification

============================================================

Phase 1: Infrastructure

------------------------------------------------------------

[00:00] ⏳ Checking Pulsar...

[00:03] ⏳ Checking Pulsar... (attempt 2)

[00:03] ✓ Pulsar: Pulsar healthy (0 cluster(s))

[00:03] ⏳ Checking API Gateway...

[00:03] ✓ API Gateway: API Gateway is responding

Phase 2: Core Services

------------------------------------------------------------

[00:03] ⏳ Checking Processors...

[00:03] ✓ Processors: Found 34 processors (≥ 15)

[00:03] ⏳ Checking Flow Classes...

[00:06] ⏳ Checking Flow Classes... (attempt 2)

[00:09] ⏳ Checking Flow Classes... (attempt 3)

[00:22] ⏳ Checking Flow Classes... (attempt 4)

[00:35] ⏳ Checking Flow Classes... (attempt 5)

[00:38] ⏳ Checking Flow Classes... (attempt 6)

[00:38] ✓ Flow Classes: Found 9 flow class(es)

[00:38] ⏳ Checking Flows...

[00:38] ✓ Flows: Flow manager responding (1 flow(s))

[00:38] ⏳ Checking Prompts...

[00:38] ✓ Prompts: Found 16 prompt(s)

Phase 3: Data Services

------------------------------------------------------------

[00:38] ⏳ Checking Library...

[00:38] ✓ Library: Library responding (0 document(s))

Phase 4: User Interface

------------------------------------------------------------

[00:38] ⏳ Checking Workbench UI...

[00:38] ✓ Workbench UI: Workbench UI is responding

============================================================

Summary

============================================================

Checks passed: 8/8

Checks failed: 0/8

Total time: 00:38

✓ System is healthy!

The Checks failed line is the one to watch: it should be zero. If you run into issues, see the Troubleshooting section later in this guide.

If everything appears to be working, the following parts of the deployment guide are a whistle-stop tour through various parts of the system.

Test LLM access

Test that the AWS Bedrock integration is working by invoking the LLM through the gateway:

tg-invoke-llm 'Be helpful' 'What is 2 + 2?'

You should see output like:

2 + 2 = 4

This confirms that TrustGraph can successfully communicate with the AWS Bedrock service.

Load sample documents

Load a small set of sample documents into the library for testing:

tg-load-sample-documents

This downloads documents from the internet and caches them locally. The download can take a little time to run.

Workbench

TrustGraph includes a web interface for document processing and Graph RAG.

Access the TrustGraph workbench at http://localhost:8888 (requires port-forwarding to be running).

By default, there are no credentials.

You should be able to navigate to the Flows tab and see a single default flow running. The guide will return to the workbench to load a document.

Monitoring dashboard

Access Grafana monitoring at http://localhost:3000 (requires port-forwarding to be running).

Default credentials:

- Username: admin

- Password: admin

All TrustGraph components collect metrics using Prometheus and make them available through Grafana. The Grafana deployment is configured with two dashboards:

- Overview metrics dashboard: Shows processing metrics

- Logs dashboard: Shows collated TrustGraph container logs

For a newly launched system, the metrics won’t be particularly interesting yet.

Check the LLM is working

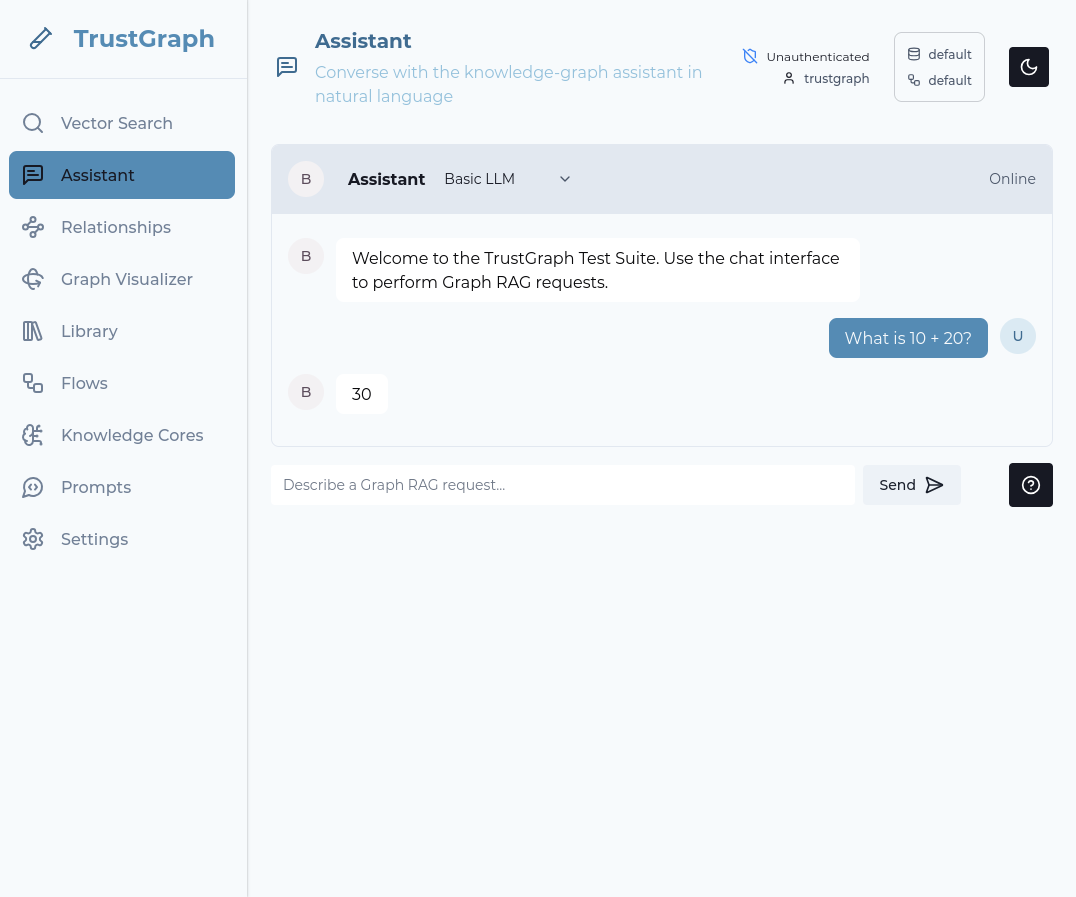

Back in the workbench, select the Assistant tab.

On the top line, next to the word Assistant, change the mode to Basic LLM.

Enter a question in the prompt box at the bottom of the tab and press Send. If everything works, after a short period you should see a response to your query.

If LLM interactions are not working, check the Grafana logs dashboard for errors in the text-completion service.

Working with a document

Load a document

Back in the workbench:

- Navigate to the Library page

- In the upper right-hand corner, there is a dark/light mode widget. To its left is a selector widget. Ensure the top and bottom lines say “default”. If not, click on the widget and change.

- On the library tab, select a document (e.g., “Beyond State Vigilance”)

- Click Submit on the action bar

- Choose a processing flow (use Default processing flow)

- Click Submit to process

Beyond State Vigilance is a relatively short document, so it’s a good one to start with.

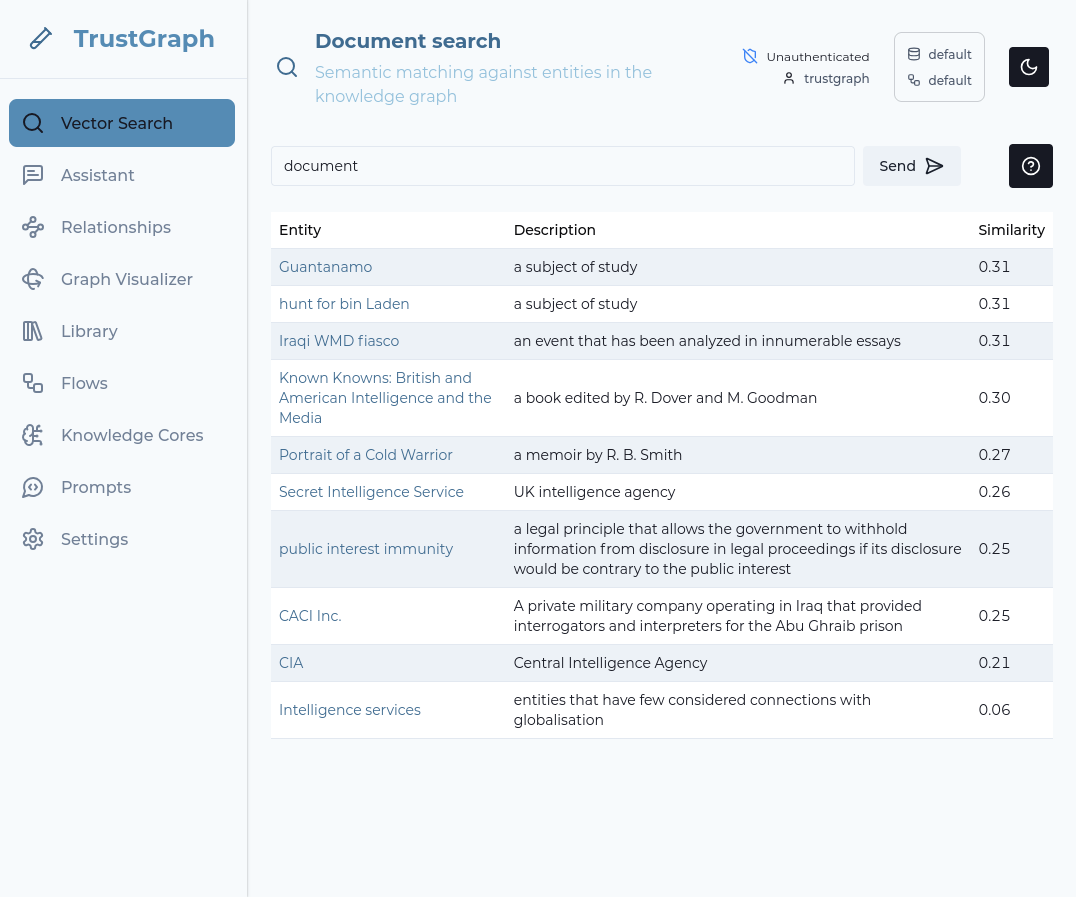

Use Vector search

Select the Vector Search tab. Enter a string (e.g., “document”) in the search bar and hit RETURN. The search term doesn’t matter a great deal. If information has started to load, you should see some search results.

The vector search attempts to find up to 10 terms which are the closest matches for your search term. It does this even if the search terms are not a strong match, so this is a simple way to observe whether data has loaded.

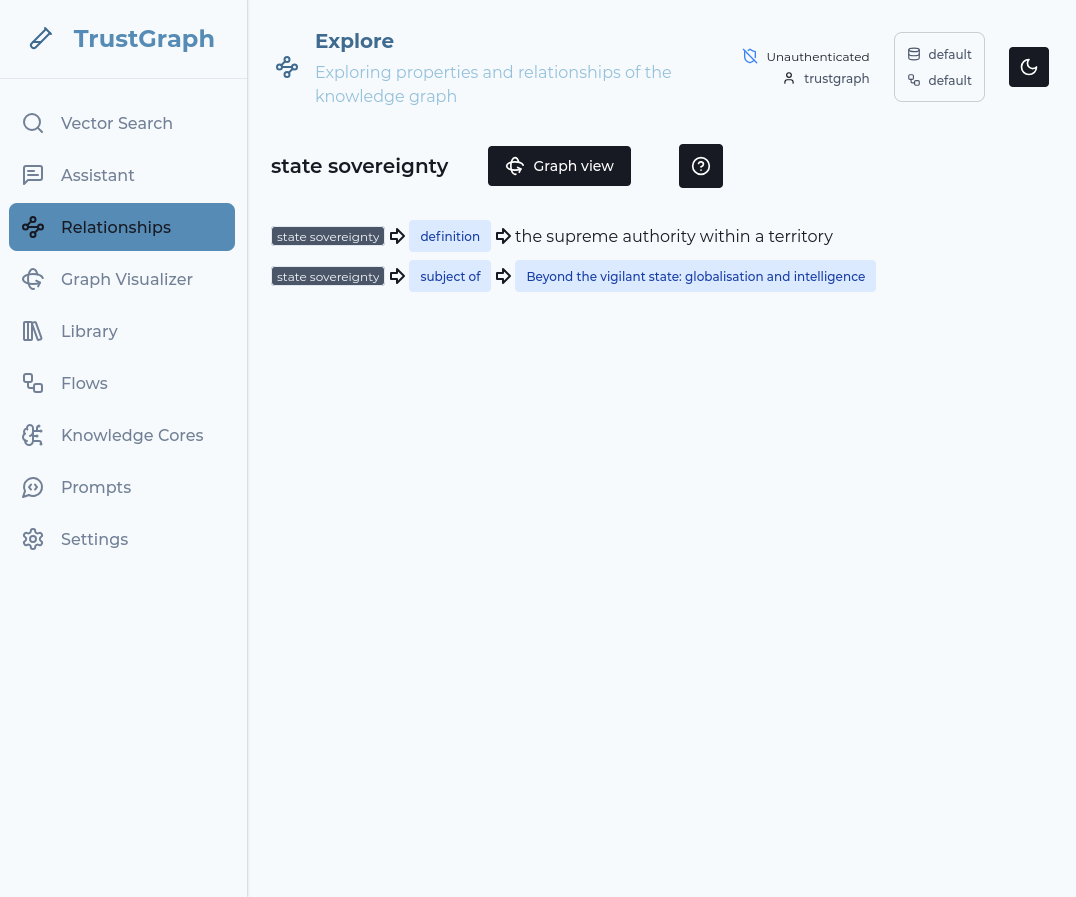

Look at knowledge graph

Click on one of the Vector Search result terms on the left-hand side. This shows the relationships in the knowledge graph that link to that term.

You can then click on the Graph view button to go to a 3D view of the discovered relationships.

Query with Graph RAG

- Navigate to Assistant tab

- Change the Assistant mode to GraphRAG

- Enter your question (e.g., “What is this document about?”)

- You will see the answer to your question after a short period

Troubleshooting

Deployment Issues

Pulumi deployment fails

Diagnosis:

Check the Pulumi error output for specific failure messages:

# View detailed error information

pulumi stack --show-urns

pulumi logs

Resolution:

- Authentication errors: Verify AWS credentials are configured (aws configure or AWS_PROFILE)

- Bedrock access denied: Ensure Bedrock model access is enabled in your region

- Quota limits: Check your AWS account hasn’t hit EC2 instance quotas

- Region availability: Verify the instance type is available in your selected region

- Permissions: Ensure your AWS user/role has EC2, IAM, and Bedrock permissions

Instance launches but containers don't start

Diagnosis:

SSH to the instance and check container status:

ssh -i ssh-private.key ubuntu@$(pulumi stack output instanceIp)

sudo podman ps -a

sudo journalctl -u podman-compose -n 100

Resolution:

- Container pull failures: Check internet connectivity and container registry access

- Resource constraints: Verify instance type has sufficient memory (minimum t3.xlarge)

- Podman configuration issues: Review /var/log/cloud-init-output.log for setup errors

- Port conflicts: Ensure no other services are using required ports

AWS Bedrock integration not working

Diagnosis:

Test Bedrock connectivity from the instance:

# SSH to instance

ssh -i ssh-private.key ubuntu@$(pulumi stack output instanceIp)

# Test LLM

tg-invoke-llm '' 'What is 2+2'

Check the text-completion container logs:

sudo podman logs $(sudo podman ps -q -f name=text-completion)

Resolution:

- Verify Bedrock model access is enabled in AWS Console

- Check IAM role has bedrock:InvokeModel permission

- Ensure the model ID is correct for your region

- Verify instance profile is attached: aws sts get-caller-identity

- Check Bedrock service quotas haven’t been exceeded

SSH connection fails

Diagnosis:

Verify instance is running and accessible:

# Check instance state

aws ec2 describe-instances --filters "Name=tag:Name,Values=trustgraph-ec2"

# Verify security group allows SSH

aws ec2 describe-security-groups --group-ids $(pulumi stack output securityGroupId)

Resolution:

- Verify SSH key permissions: chmod 600 ssh-private.key

- Check your IP is allowed in security group (may need to update)

- Ensure instance has public IP assigned

- Verify instance state is running

- Check CloudWatch Logs for instance startup issues

Service Failure

Containers in restart loop

Diagnosis:

# Find restarting containers

sudo podman ps -a | grep Restarting

# View logs from container

sudo podman logs <container-name>

Resolution:

Check the logs to identify why the container is restarting. Common causes:

- Application errors (configuration issues)

- Missing dependencies (ensure all required containers are running)

- Incorrect environment variables

- Resource limits too low (increase instance size)

- AWS credentials not properly available via IAM role

Service not responding

Diagnosis:

Check service status:

sudo podman ps

sudo podman logs <container-name>

Resolution:

- Verify the container is running

- Check container logs for errors

- Ensure port-forwarding is active in your SSH session

- Use tg-verify-system-status to check overall system health

- Restart specific container: sudo podman restart <container-name>

AWS-Specific Issues

Instance out of memory

Diagnosis:

Check memory usage on the instance:

free -h

top

Resolution:

- Upgrade to an instance type with more memory (e.g., t3.2xlarge with 32 GB → m5.4xlarge with 64 GB)

- Update Pulumi configuration: pulumi config set instanceType m5.4xlarge

- Redeploy: pulumi up

Bedrock throttling errors

Diagnosis:

Error messages about Bedrock rate limits or throttling.

Resolution:

- Check Bedrock quotas in AWS Service Quotas console

- Request quota increases if needed

- Implement rate limiting in your application

- Switch to a different Bedrock model with higher quotas

Shutting down

Clean shutdown

When you’re finished with your TrustGraph deployment, clean up all resources:

pulumi destroy

Pulumi will show you all the resources that will be deleted and ask for confirmation. Type yes to proceed.

The destruction process typically takes 3-5 minutes and removes:

- EC2 instance

- IAM roles and instance profiles

- Security groups

- Elastic IP

- EBS volumes

- SSH key pair

Cost Warning: AWS charges for running EC2 instances and EBS storage. Make sure to destroy your deployment when you’re not using it to avoid unnecessary costs. EC2 charges accrue hourly.

Verify cleanup

After pulumi destroy completes, verify all resources are removed:

# Check Pulumi stack status

pulumi stack

# Verify no resources remain

pulumi stack --show-urns

# Check AWS for remaining resources

aws ec2 describe-instances --filters "Name=tag:Name,Values=trustgraph-ec2"

Delete the Pulumi stack

If you’re completely done with this deployment, you can remove the Pulumi stack:

pulumi stack rm dev

This removes the stack’s state but doesn’t affect any cloud resources (use pulumi destroy first).

Cost Optimization

Monitor Costs

Keep track of your AWS spending:

- Navigate to Billing in AWS Console

- Use Cost Explorer to view EC2 and Bedrock costs

- Set up billing alerts for unexpected charges

Cost-Saving Tips

- Stop instance when not in use: aws ec2 stop-instances --instance-ids <instance-id>

- Use Spot Instances: Configure Pulumi for spot instances (60-90% cheaper)

- Right-size instance: Start with t3.xlarge and upgrade only if needed

- Reserved Instances: For long-term use, purchase reserved capacity

- Bedrock costs: Pay only for API calls, no base charge

- Delete snapshots: Clean up any EBS snapshots you don’t need

Example cost estimates (us-east-1):

- t3.2xlarge instance: ~$0.33/hour (~$240/month if running continuously)

- EBS storage (100GB): ~$10/month

- Bedrock API calls: ~$0.25-$1.00 per 1M input tokens (varies by model)

- Total estimated: ~$250-300/month for continuous operation

Cost reduction: Stop the instance when not in use to pay mainly for storage (~$10/month), plus a small hourly charge for the Elastic IP address.
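The arithmetic behind these estimates is easy to rerun for your own usage pattern. The sketch below uses the approximate rates quoted above (treat them as estimates, not current AWS pricing), assumes roughly 730 hours in a month, and leaves out Bedrock token charges. The helper name monthly_cost is hypothetical.

```shell
# Estimate a monthly bill from an hourly instance rate, the hours the
# instance actually runs, and a flat monthly storage charge.
# Usage: monthly_cost <hourly-rate> <hours-running> <storage-per-month>
monthly_cost() {
    awk -v rate="$1" -v hours="$2" -v storage="$3" \
        'BEGIN { printf "%.2f\n", rate * hours + storage }'
}

monthly_cost 0.33 730 10   # running continuously: prints 250.90
monthly_cost 0.33 176 10   # 8 hours/day on 22 working days: prints 68.08
```

The second figure shows why stopping the instance outside working hours matters: the instance cost scales with hours running, while the storage charge stays fixed.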

Instance Management

Starting a stopped instance

If you stopped your instance to save costs, restart it:

# Get instance ID from Pulumi

INSTANCE_ID=$(pulumi stack output instanceId)

# Start instance

aws ec2 start-instances --instance-ids $INSTANCE_ID

# Wait for running state

aws ec2 wait instance-running --instance-ids $INSTANCE_ID

# Get new IP (if not using Elastic IP)

aws ec2 describe-instances --instance-ids $INSTANCE_ID \

--query 'Reservations[0].Instances[0].PublicIpAddress'

Stopping the instance

Stop the instance without destroying it:

INSTANCE_ID=$(pulumi stack output instanceId)

aws ec2 stop-instances --instance-ids $INSTANCE_ID

While the instance is stopped, you pay for EBS storage (~$10/month) and a small hourly charge for the Elastic IP address, compared with ~$240/month for a running instance.

Security Considerations

SSH Access

The deployment creates a security group allowing SSH access from your current IP address. To update allowed IPs:

# Update security group via Pulumi

pulumi config set sshAllowedCIDR "203.0.113.0/24"

pulumi up
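To restrict access to a single address, the CIDR needs a /32 suffix. The sketch below validates an IPv4 address and appends it. The helper to_host_cidr is hypothetical, and the usage lines assume https://checkip.amazonaws.com is reachable from your machine and returns your public address.

```shell
# Convert a single IPv4 address into a /32 CIDR block. Fails (non-zero
# exit, no output) unless the input is four dot-separated numbers 0-255.
to_host_cidr() {
    printf '%s\n' "$1" | awk -F. '
        NF != 4 { exit 1 }
        {
            for (i = 1; i <= 4; i++)
                if ($i !~ /^[0-9]+$/ || $i > 255) exit 1
            print $0 "/32"
        }'
}

# Usage: look up your public address and allow only that host.
# my_ip=$(curl -s https://checkip.amazonaws.com)
# pulumi config set sshAllowedCIDR "$(to_host_cidr "$my_ip")"
# pulumi up
```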

IAM Role Permissions

The EC2 instance uses an IAM role with Bedrock permissions. The role grants only the two model-invocation actions, although on all resources:

{

"Effect": "Allow",

"Action": [

"bedrock:InvokeModel",

"bedrock:InvokeModelWithResponseStream"

],

"Resource": "*"

}
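If you want to tighten this further, the Resource field can name a single foundation model instead of a wildcard. The following is a sketch under assumptions: bedrock_statement is a hypothetical helper, this guide does not confirm how the repository's policy is defined or whether it can be overridden, and models invoked through inference profiles may need additional resource ARNs.

```shell
# Print an IAM policy statement that allows invoking one Bedrock
# foundation model in one region instead of every resource.
# Usage: bedrock_statement <region> <model-id>
bedrock_statement() {
    region=$1
    model_id=$2
    cat <<EOF
{
  "Effect": "Allow",
  "Action": [
    "bedrock:InvokeModel",
    "bedrock:InvokeModelWithResponseStream"
  ],
  "Resource": "arn:aws:bedrock:${region}::foundation-model/${model_id}"
}
EOF
}

bedrock_statement us-east-1 anthropic.claude-3-5-haiku-20241022-v1:0
```

Scoping the statement this way means switching to a different Bedrock model also requires a policy change, which is the trade-off for the narrower permission.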

Network Security

- No inbound traffic except SSH (port 22)

- Outbound traffic allowed for container registries and AWS services

- Service access via SSH port forwarding (no direct exposure)

Next Steps

Now that you have TrustGraph running on AWS EC2:

- Guides: See Guides for things you can do with your running TrustGraph

- Experiment with models: Try different Bedrock models (Claude Sonnet, Mistral, etc.)

- Scale up: Upgrade instance type for better performance

- Production migration: When ready, migrate to AWS RKE deployment for production use

- Customize containers: Modify Podman Compose configuration for your needs

- Integration: Connect to other AWS services (S3, DynamoDB, etc.)

Additional Resources

- TrustGraph AWS EC2 Pulumi Repository - Full source code and configuration

- AWS Bedrock Documentation - Learn more about AWS foundation models

- Podman Documentation - Container management reference

- AWS EC2 Best Practices - AWS recommendations