Microsoft Azure AKS Deployment

Deploy on Azure Kubernetes Service with AI Foundry and dual AI model support

Advanced

2 - 4 hr

- Azure account with active subscription (see below for setup)

- Azure CLI installed and configured

- Pulumi installed locally

- kubectl command-line tool

- Python 3.11+ for CLI tools

- Basic command-line and Kubernetes familiarity

Deploy a production-ready TrustGraph environment on Azure Kubernetes Service with AI Foundry and dual AI model support using Infrastructure as Code.

Overview

This guide walks you through deploying TrustGraph on Microsoft Azure’s Kubernetes Service (AKS) using Pulumi (Infrastructure as Code). The deployment automatically provisions a production-ready Kubernetes cluster integrated with Azure’s AI services including AI Foundry and Cognitive Services.

Pulumi is an open-source Infrastructure as Code tool that uses general-purpose programming languages (TypeScript/JavaScript in this case) to define cloud infrastructure. Unlike manual deployments, Pulumi provides:

- Reproducible, version-controlled infrastructure

- Testable and retryable deployments

- Automatic resource dependency management

- Simple rollback capabilities

Once deployed, you’ll have a complete TrustGraph stack running on Azure infrastructure with:

- Azure Kubernetes Service (AKS) cluster (2-node pool, configurable)

- Azure AI Foundry integration with Phi-4 model

- Azure Cognitive Services with OpenAI GPT-4o-mini

- Complete monitoring with Grafana and Prometheus

- Web workbench for document processing and Graph RAG

- Secure secrets management with Azure Key Vault

Why Microsoft Azure for TrustGraph?

Azure offers unique advantages for enterprise organizations:

- Dual AI Models: Choose between Azure AI Foundry (Phi-4) and OpenAI (GPT-4o-mini)

- Enterprise Integration: Native integration with Microsoft 365, Active Directory, and enterprise services

- Hybrid Cloud: Seamless hybrid cloud capabilities with Azure Arc

- Compliance: Extensive compliance certifications (ISO, SOC, HIPAA, FedRAMP, etc.)

- Global Scale: 60+ regions worldwide with Microsoft’s global network

Ideal for organizations in the Microsoft ecosystem requiring enterprise-grade AI and compliance.

Getting ready

Azure Account

You’ll need an Azure account with an active subscription. If you don’t have one:

- Sign up at https://azure.microsoft.com/

- Complete account verification

- Create or select a subscription

- New users receive $200 in free credits for 30 days

Create a Resource Group (Optional)

While Pulumi will create a resource group, you may want to pre-create one for organizational purposes:

az group create --name trustgraph-rg --location eastus

Install Azure CLI

Install the Azure command-line tool:

Linux

curl -sL https://aka.ms/InstallAzureCLIDeb | sudo bash

MacOS

brew update && brew install azure-cli

Windows

Download the installer from aka.ms/installazurecliwindows

Verify installation:

az --version

Configure Azure Authentication

Authenticate with your Azure account:

az login

This will open a browser for authentication. After successful login, set your default subscription:

az account set --subscription "YOUR_SUBSCRIPTION_ID"

You can list your subscriptions with:

az account list --output table

Register Required Resource Providers

Ensure necessary Azure resource providers are registered:

az provider register --namespace Microsoft.ContainerService

az provider register --namespace Microsoft.Compute

az provider register --namespace Microsoft.Network

az provider register --namespace Microsoft.Storage

az provider register --namespace Microsoft.KeyVault

az provider register --namespace Microsoft.CognitiveServices
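Provider registration is asynchronous and can take several minutes to finish. As a minimal sketch, the helper below (the function name is made up for this example) asks the Azure CLI for each provider's registrationState and prints any provider that is not yet Registered. It assumes you are already logged in with az login.

```shell
# Print each provider from the argument list whose state is not "Registered".
unregistered_providers() {
    for ns in "$@"; do
        state=$(az provider show --namespace "$ns" --query registrationState --output tsv)
        if [ "$state" != "Registered" ]; then
            echo "$ns ($state)"
        fi
    done
}

# Usage (requires a logged-in Azure CLI):
#   unregistered_providers Microsoft.ContainerService Microsoft.Compute \
#       Microsoft.Network Microsoft.Storage Microsoft.KeyVault \
#       Microsoft.CognitiveServices
```

No output means every listed provider is registered. Otherwise, wait a little and run it again.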

Python

You need Python 3.11 or later installed for the TrustGraph CLI tools.

Check your Python version

python3 --version

If you need to install or upgrade Python, visit python.org.
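If you want to script the version check, the sketch below compares a version string against a required major and minor number. The function name is hypothetical and only the 3.11 requirement comes from this guide.

```shell
# Succeed if version string $1 ("major.minor[.patch]") is at least $2.$3.
version_at_least() {
    major=${1%%.*}
    rest=${1#*.}
    minor=${rest%%.*}
    [ "$major" -gt "$2" ] || { [ "$major" -eq "$2" ] && [ "$minor" -ge "$3" ]; }
}

# Usage:
#   version_at_least "$(python3 -c 'import platform; print(platform.python_version())')" 3 11 \
#       && echo "Python OK" || echo "Python 3.11+ required"
```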

Pulumi

Install Pulumi on your local machine:

Linux

curl -fsSL https://get.pulumi.com | sh

MacOS

brew install pulumi/tap/pulumi

Windows

Download the installer from pulumi.com.

Verify installation:

pulumi version

Full installation details are at pulumi.com.

kubectl

Install kubectl to manage your Kubernetes cluster:

- Linux: Install kubectl on Linux

- MacOS: brew install kubectl

- Windows: Install kubectl on Windows

Verify installation:

kubectl version --client

Node.js

The Pulumi deployment code uses TypeScript/JavaScript, so you’ll need Node.js installed:

- Download: nodejs.org (LTS version recommended)

- Linux: sudo apt install nodejs npm (Ubuntu/Debian) or sudo dnf install nodejs (Fedora)

- MacOS: brew install node

Verify installation:

node --version

npm --version
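With all the tools installed, a quick preflight check can confirm that each one is on your PATH before you start the deployment. This is a minimal sketch. The function name is made up for this example, and the tool list mirrors the prerequisites in this guide.

```shell
# Print each tool from the argument list that is not found on PATH.
missing_tools() {
    for tool in "$@"; do
        if ! command -v "$tool" >/dev/null 2>&1; then
            echo "$tool"
        fi
    done
}

# Check the tools named in this guide's prerequisites.
missing=$(missing_tools az pulumi kubectl python3 node npm)
if [ -n "$missing" ]; then
    echo "Missing tools:" $missing
else
    echo "All prerequisite tools found"
fi
```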

Azure AI Services Access

The deployment supports two AI configurations:

Option 1: Azure AI Foundry (Machine Learning)

- Model: Phi-4 (serverless endpoint)

- Configuration: Uses resources.yaml.mls

- Requirements: Azure subscription with AI Foundry access

Option 2: Azure Cognitive Services (OpenAI)

- Model: GPT-4o-mini

- Configuration: Uses resources.yaml.cs

- Requirements: Azure subscription with Cognitive Services access

You’ll choose your AI model during deployment preparation.

Prepare the deployment

Get the Pulumi code

Clone the TrustGraph Azure Pulumi repository:

git clone https://github.com/trustgraph-ai/pulumi-trustgraph-azure.git

cd pulumi-trustgraph-azure/pulumi

Choose your AI model configuration

Select which AI model to use:

For Azure AI Foundry (Phi-4):

cp resources.yaml.mls resources.yaml

For Azure Cognitive Services (OpenAI GPT-4o-mini):

cp resources.yaml.cs resources.yaml

You can switch between configurations by copying the appropriate template file to resources.yaml and re-deploying.
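If you expect to switch templates often, the copy step can be wrapped in a small helper that also checks the template exists. This is only a sketch: the function name and the labels foundry and openai are invented for illustration, and only the file names come from the repository.

```shell
# Copy the template for the chosen model into resources.yaml.
# $1 is "foundry" (Phi-4) or "openai" (GPT-4o-mini); these labels are
# hypothetical, only the template file names come from the repository.
use_ai_template() {
    case "$1" in
        foundry) template=resources.yaml.mls ;;
        openai)  template=resources.yaml.cs ;;
        *) echo "usage: use_ai_template foundry|openai" >&2; return 2 ;;
    esac
    if [ ! -f "$template" ]; then
        echo "template $template not found in $(pwd)" >&2
        return 1
    fi
    cp "$template" resources.yaml
}

# Usage, from the pulumi-trustgraph-azure/pulumi directory:
#   use_ai_template openai
```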

Install dependencies

Install the Node.js dependencies for the Pulumi project:

npm install

Configure Pulumi state

You need to tell Pulumi where to store its state. You can store it in a cloud storage backend such as an S3 bucket or an Azure Blob Storage container, but for experimentation you can just use local state:

pulumi login --local

When storing secrets in the Pulumi state, Pulumi uses a secret passphrase to encrypt them. In a production or shared environment, you would need to evaluate the security arrangements around secrets.

We’re just going to set this to the empty string, assuming that no encryption is fine for a development deploy.

export PULUMI_CONFIG_PASSPHRASE=
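For anything beyond a throwaway deployment you may prefer a real passphrase. One option, sketched here under the assumption that a local file readable only by you is acceptable, is to generate a random passphrase and point Pulumi at it with the PULUMI_CONFIG_PASSPHRASE_FILE environment variable instead of PULUMI_CONFIG_PASSPHRASE. The function name and the file location are hypothetical. Set this before creating the stack, because the passphrase is tied to the stack's secrets.

```shell
# Write a random 32-byte passphrase, hex-encoded, to file $1.
# The umask makes a newly created file readable only by its owner.
make_passphrase_file() {
    (
        umask 077
        od -An -N32 -tx1 /dev/urandom | tr -d ' \n' > "$1"
    )
}

# Usage (the file location is a hypothetical choice):
#   make_passphrase_file "$HOME/.trustgraph-pulumi-passphrase"
#   export PULUMI_CONFIG_PASSPHRASE_FILE="$HOME/.trustgraph-pulumi-passphrase"
```

Keep the file safe: without the passphrase, the secrets stored in the stack cannot be decrypted.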

Create a Pulumi stack

Initialize a new Pulumi stack for your deployment:

pulumi stack init dev

You can use any name instead of dev - this helps you manage multiple deployments (dev, staging, prod, etc.).

Configure the stack

Apply settings for Azure region and environment:

pulumi config set azure-native:location eastus

pulumi config set environment dev

Available Azure regions include:

- eastus (East US)

- westus2 (West US 2)

- northeurope (North Europe)

- westeurope (West Europe)

- uksouth (UK South)

- southeastasia (Southeast Asia)

- australiaeast (Australia East)

Refer to Azure Regions for a complete list.

Configure AI model settings

For Azure AI Foundry (Phi-4):

pulumi config set aiEndpointModel "azureml://registries/azureml/models/Phi-4"

For Azure Cognitive Services (OpenAI):

pulumi config set openaiModel gpt-4o-mini

pulumi config set openaiVersion "2024-07-18"

pulumi config set contentFiltering Microsoft.DefaultV2

Refer to the repository’s README for additional configuration options and model choices.

Deploy with Pulumi

Preview the deployment

Before deploying, preview what Pulumi will create:

pulumi preview

This shows all the resources that will be created:

- Resource group for all TrustGraph resources

- Azure Identity service principal

- AKS Kubernetes cluster

- Node pool with specified VM sizes

- Azure Key Vault for secrets

- Storage account for AI services

- Azure AI Foundry (AI hub, workspace, serverless endpoints) OR

- Cognitive Services (OpenAI deployment)

- Kubernetes secrets for Azure credentials

- TrustGraph deployments, services, and config maps

Review the output to ensure everything looks correct.

Deploy the infrastructure

Deploy the complete TrustGraph stack:

pulumi up

Pulumi will ask for confirmation before proceeding. Type yes to continue.

The deployment typically takes 15 - 25 minutes and progresses through these stages:

- Creating Azure resources (8-12 minutes)

  - Creates resource group

  - Sets up service principal

  - Provisions Key Vault and Storage Account

- Creating AKS cluster (8-10 minutes)

  - Provisions AKS cluster

  - Creates node pool

  - Configures networking

- Configuring AI services (2-3 minutes)

  - Sets up Azure AI Foundry (Phi-4) OR Cognitive Services (OpenAI)

  - Creates AI endpoints

  - Configures authentication

- Deploying TrustGraph (4-6 minutes)

  - Applies Kubernetes manifests

  - Deploys all TrustGraph services

  - Starts pods and initializes services

You’ll see output showing the creation progress of all resources.

Configure and verify kubectl access

After deployment completes, configure kubectl to access your AKS cluster:

az aks get-credentials --resource-group trustgraph-rg --name trustgraph-aks

Verify access:

kubectl get nodes

You should see your AKS nodes listed as Ready.

Check pod status

Verify that all pods are running:

kubectl -n trustgraph get pods

You should see output similar to this (pod names will have different random suffixes):

NAME READY STATUS RESTARTS AGE

agent-manager-74fbb8b64-nzlwb 1/1 Running 0 5m

api-gateway-b6848c6bb-nqtdm 1/1 Running 0 5m

cassandra-6765fff974-pbh65 1/1 Running 0 5m

pulsar-d85499879-x92qv 1/1 Running 0 5m

text-completion-58ccf95586-6gkff 1/1 Running 0 5m

workbench-ui-5fc6d59899-8rczf 1/1 Running 0 5m

...

All pods should show Running status. Some init pods (names ending in -init) may show a failed or Completed status. This is normal: their job is to initialise cluster resources and then exit.
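On a deployment with many pods it can be easier to list only the ones that need attention. The sketch below filters the table printed by kubectl get pods and shows pods whose STATUS is neither Running nor Completed. The function name is made up for this example. Init pods that have failed will also be listed, and those can be ignored.

```shell
# Read `kubectl get pods` table output on stdin and print the name and
# status of every pod that is neither Running nor Completed.
unhealthy_pods() {
    awk 'NR > 1 && $3 != "Running" && $3 != "Completed" { print $1 " " $3 }'
}

# Usage:
#   kubectl -n trustgraph get pods | unhealthy_pods
```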

Access services via port-forwarding

Since the Kubernetes cluster is running on Azure, you’ll need to set up port-forwarding to access TrustGraph services from your local machine.

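Open three separate terminal windows and run these commands (keep them running). The export KUBECONFIG line applies only if your kubeconfig was written to kubeconfig.yaml in the current directory. If you ran az aks get-credentials as shown earlier, the credentials were merged into your default kubeconfig and you can skip that line: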

Terminal 1 - API Gateway:

export KUBECONFIG=$(pwd)/kubeconfig.yaml

kubectl -n trustgraph port-forward svc/api-gateway 8088:8088

Terminal 2 - Workbench UI:

export KUBECONFIG=$(pwd)/kubeconfig.yaml

kubectl -n trustgraph port-forward svc/workbench-ui 8888:8888

Terminal 3 - Grafana:

export KUBECONFIG=$(pwd)/kubeconfig.yaml

kubectl -n trustgraph port-forward svc/grafana 3000:3000

With these port-forwards running, you can access:

- TrustGraph API: http://localhost:8088

- Web Workbench: http://localhost:8888

- Grafana Monitoring: http://localhost:3000

Keep these terminal windows open while you’re working with TrustGraph. If you close them, you’ll lose access to the services.
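If you would rather use a single terminal, the three port-forwards can be started as background jobs. This is a sketch rather than part of the TrustGraph tooling: the function name and the FORWARD_PIDS variable are invented here, while the service names and ports are the ones listed above.

```shell
# Start the three port-forwards from this guide in the background.
# Their process IDs are collected in FORWARD_PIDS so they can be stopped later.
start_forwards() {
    FORWARD_PIDS=""
    for spec in api-gateway:8088 workbench-ui:8888 grafana:3000; do
        svc=${spec%%:*}
        port=${spec##*:}
        kubectl -n trustgraph port-forward "svc/$svc" "$port:$port" &
        FORWARD_PIDS="$FORWARD_PIDS $!"
    done
}

# Usage: start the forwards, then stop them all on Ctrl-C or exit.
#   start_forwards
#   trap 'kill $FORWARD_PIDS 2>/dev/null' INT TERM EXIT
#   wait
```

The trap matters because background jobs in a script are not stopped by Ctrl-C on their own.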

Install CLI tools

Now install the TrustGraph command-line tools. These tools help you interact with TrustGraph, load documents, and verify the system.

Create a Python virtual environment and install the CLI:

python3 -m venv env

source env/bin/activate # On Windows: env\Scripts\activate

pip install trustgraph-cli

Startup period

It can take 2-3 minutes for all services to stabilize after deployment. Services like Pulsar and Cassandra need time to initialize properly.

Verify system health

tg-verify-system-status

If everything is working, the output looks something like this:

============================================================

TrustGraph System Status Verification

============================================================

Phase 1: Infrastructure

------------------------------------------------------------

[00:00] ⏳ Checking Pulsar...

[00:03] ⏳ Checking Pulsar... (attempt 2)

[00:03] ✓ Pulsar: Pulsar healthy (0 cluster(s))

[00:03] ⏳ Checking API Gateway...

[00:03] ✓ API Gateway: API Gateway is responding

Phase 2: Core Services

------------------------------------------------------------

[00:03] ⏳ Checking Processors...

[00:03] ✓ Processors: Found 34 processors (≥ 15)

[00:03] ⏳ Checking Flow Classes...

[00:06] ⏳ Checking Flow Classes... (attempt 2)

[00:09] ⏳ Checking Flow Classes... (attempt 3)

[00:22] ⏳ Checking Flow Classes... (attempt 4)

[00:35] ⏳ Checking Flow Classes... (attempt 5)

[00:38] ⏳ Checking Flow Classes... (attempt 6)

[00:38] ✓ Flow Classes: Found 9 flow class(es)

[00:38] ⏳ Checking Flows...

[00:38] ✓ Flows: Flow manager responding (1 flow(s))

[00:38] ⏳ Checking Prompts...

[00:38] ✓ Prompts: Found 16 prompt(s)

Phase 3: Data Services

------------------------------------------------------------

[00:38] ⏳ Checking Library...

[00:38] ✓ Library: Library responding (0 document(s))

Phase 4: User Interface

------------------------------------------------------------

[00:38] ⏳ Checking Workbench UI...

[00:38] ✓ Workbench UI: Workbench UI is responding

============================================================

Summary

============================================================

Checks passed: 8/8

Checks failed: 0/8

Total time: 00:38

✓ System is healthy!

The Checks failed line is the most interesting and is hopefully zero. If you are having issues, look at the troubleshooting section later.
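Because services can take a few minutes to stabilize, a check that runs too early may fail even though nothing is wrong. A minimal sketch of a generic retry helper is shown below. The function name is hypothetical. Using it with tg-verify-system-status assumes that the tool exits with a non-zero status when a check fails, which this guide does not confirm.

```shell
# Run a command up to $1 times, sleeping $2 seconds between attempts.
# Returns 0 as soon as the command succeeds, 1 if every attempt fails.
retry() {
    attempts=$1
    delay=$2
    shift 2
    n=1
    while ! "$@"; do
        if [ "$n" -ge "$attempts" ]; then
            return 1
        fi
        n=$((n + 1))
        sleep "$delay"
    done
    return 0
}

# Usage, ASSUMING tg-verify-system-status exits non-zero on failure:
#   retry 5 30 tg-verify-system-status
```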

If everything appears to be working, the following parts of the deployment guide are a whistle-stop tour through various parts of the system.

Test LLM access

Test that Azure AI integration is working by invoking the LLM through the gateway:

tg-invoke-llm 'Be helpful' 'What is 2 + 2?'

You should see output like:

2 + 2 = 4

This confirms that TrustGraph can successfully communicate with your chosen Azure AI service (AI Foundry or Cognitive Services).

Load sample documents

Load a small set of sample documents into the library for testing:

tg-load-sample-documents

This downloads documents from the internet and caches them locally. The download can take a little time to run.

Workbench

TrustGraph includes a web interface for document processing and Graph RAG.

Access the TrustGraph workbench at http://localhost:8888 (requires port-forwarding to be running).

By default, there are no credentials.

You should be able to navigate to the Flows tab and see a single default flow running. The guide will return to the workbench to load a document.

Monitoring dashboard

Access Grafana monitoring at http://localhost:3000 (requires port-forwarding to be running).

Default credentials:

- Username:

admin - Password:

admin

All TrustGraph components collect metrics using Prometheus and make these available using this Grafana workbench. The Grafana deployment is configured with 2 dashboards:

- Overview metrics dashboard: Shows processing metrics

- Logs dashboard: Shows collated TrustGraph container logs

For a newly launched system, the metrics won’t be particularly interesting yet.

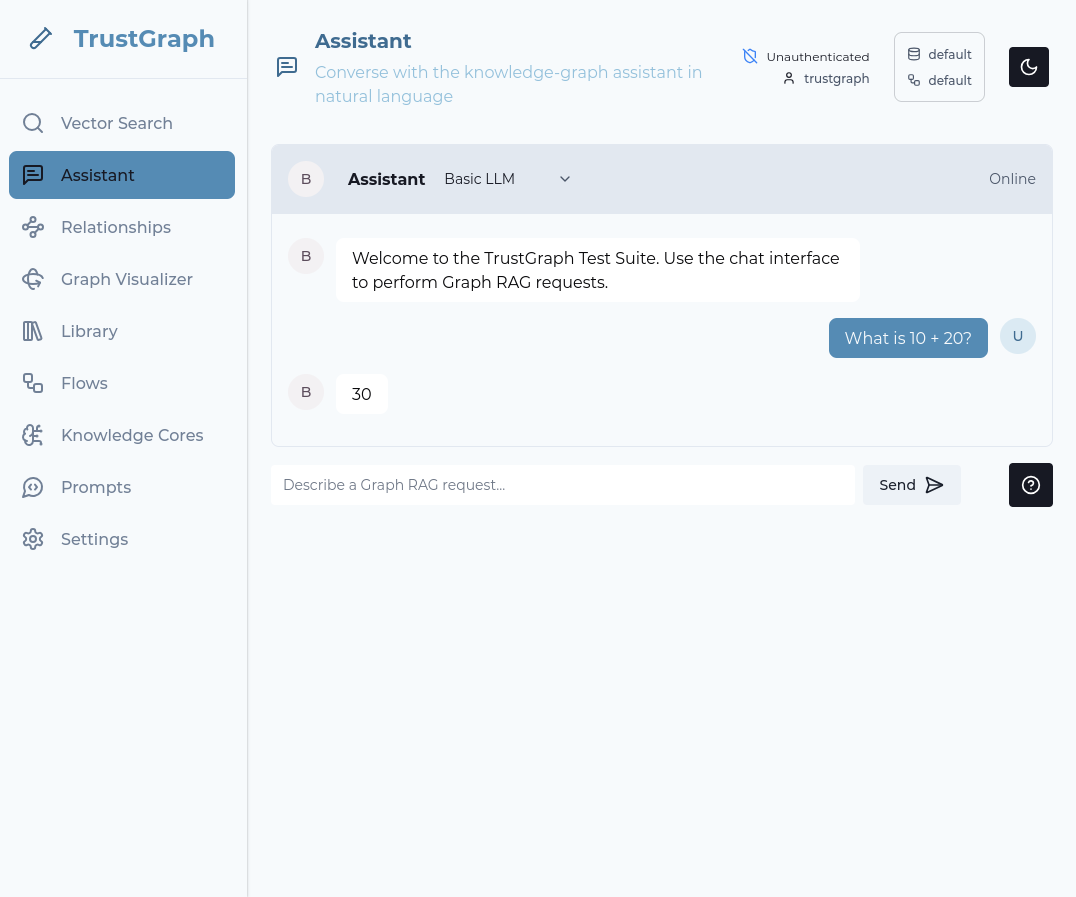

Check the LLM is working

Back in the workbench, select the Assistant tab.

In the top line next to the Assistant word, change the mode to Basic LLM.

Enter a question in the prompt box at the bottom of the tab and press Send. If everything works, after a short period you should see a response to your query.

If LLM interactions are not working, check the Grafana logs dashboard for errors in the text-completion service.

Working with a document

Load a document

Back in the workbench:

- Navigate to the Library page

- In the upper right-hand corner, there is a dark/light mode widget. To its left is a selector widget. Ensure the top and bottom lines both say “default”. If not, click on the widget and change them.

- On the library tab, select a document (e.g., “Beyond State Vigilance”)

- Click Submit on the action bar

- Choose a processing flow (use Default processing flow)

- Click Submit to process

Beyond State Vigilance is a relatively short document, so it’s a good one to start with.

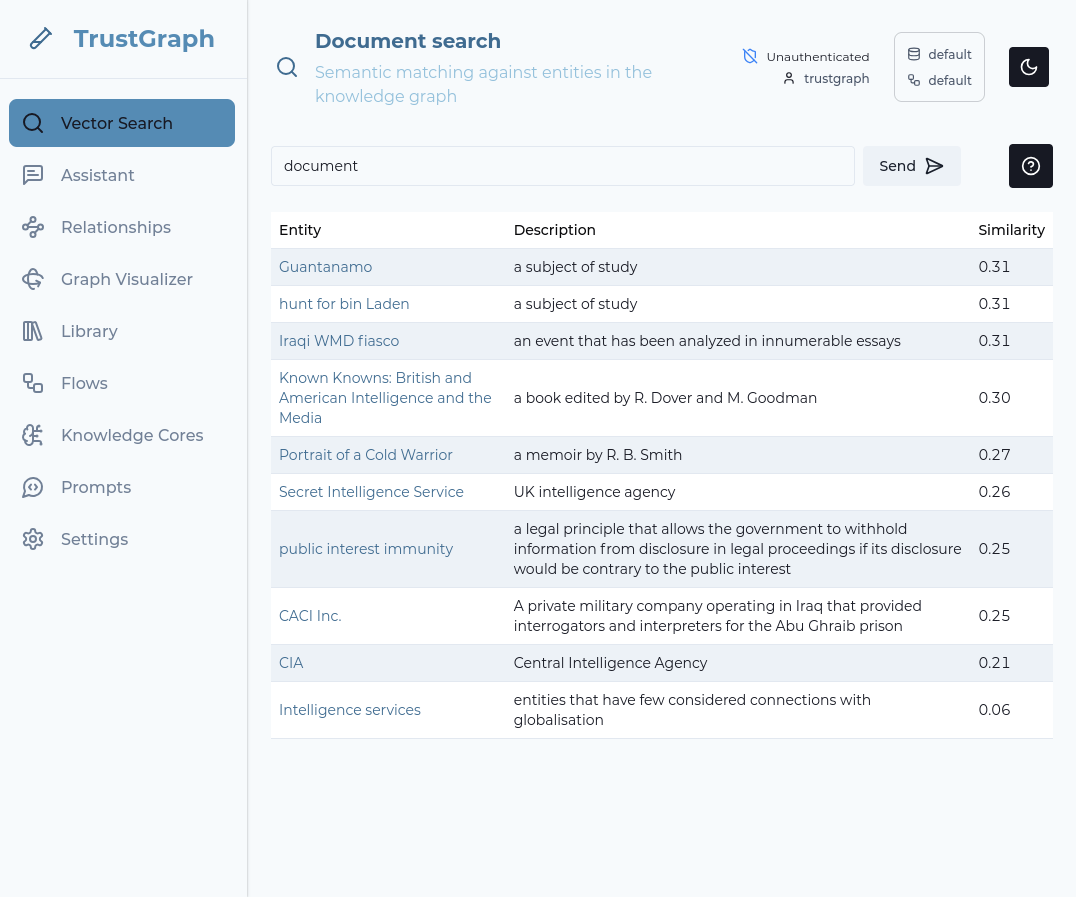

Use Vector search

Select the Vector Search tab. Enter a string (e.g., “document”) in the search bar and hit RETURN. The search term doesn’t matter a great deal. If information has started to load, you should see some search results.

The vector search attempts to find up to 10 terms which are the closest matches for your search term. It does this even if the search terms are not a strong match, so this is a simple way to observe whether data has loaded.

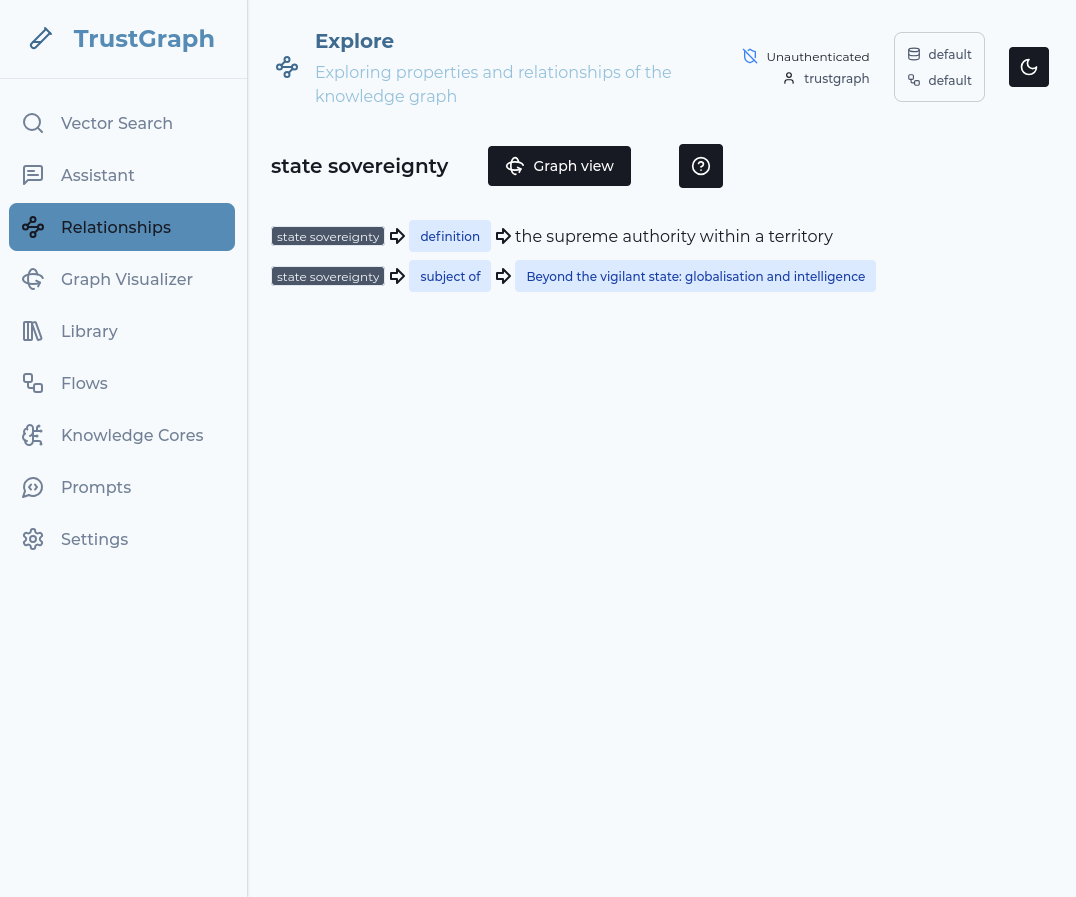

Look at knowledge graph

Click on one of the Vector Search result terms on the left-hand side. This shows the knowledge graph relationships that link to that term.

You can then click on the Graph view button to go to a 3D view of the discovered relationships.

Query with Graph RAG

- Navigate to Assistant tab

- Change the Assistant mode to GraphRAG

- Enter your question (e.g., “What is this document about?”)

- You will see the answer to your question after a short period

Troubleshooting

Deployment Issues

Pulumi deployment fails

Diagnosis:

Check the Pulumi error output for specific failure messages:

# View detailed error information

pulumi stack --show-urns

pulumi logs

Resolution:

- Authentication errors: Verify az login was successful and you have an active subscription

- Provider not registered: Ensure all required Azure resource providers are registered (see “Register Required Resource Providers” section)

- Quota limits: Check your Azure subscription hasn’t hit resource quotas (AKS clusters, VMs, cores)

- Permission issues: Ensure your account has Contributor or Owner role on the subscription

- Region capacity: Try a different Azure region if resources aren’t available

Pods stuck in Pending state

Diagnosis:

kubectl -n trustgraph get pods | grep Pending

kubectl -n trustgraph describe pod <pod-name>

Look for scheduling failures or resource constraints in the describe output.

Resolution:

- Insufficient resources: Increase node count or VM size in your Pulumi configuration

- PersistentVolume issues: Check PV/PVC status with kubectl -n trustgraph get pv,pvc

- Node issues: Check node status with kubectl get nodes

- Azure disk limits: Verify you haven’t exceeded disk attachment limits per VM

Azure AI integration not working

Diagnosis:

Test LLM connectivity:

tg-invoke-llm '' 'What is 2+2'

A timeout or error indicates AI service configuration issues. Check the text-completion pod logs:

kubectl -n trustgraph logs -l app=text-completion

Resolution:

- Verify the correct resources.yaml file is being used (.mls for AI Foundry, .cs for Cognitive Services)

- Check that AI services are properly deployed in Azure Portal

- Verify service principal has appropriate permissions for AI services

- Ensure API keys are correctly stored in Kubernetes secrets

- Review Pulumi outputs: pulumi stack output

- Check Azure AI Foundry or Cognitive Services quotas in Azure Portal

Port-forwarding connection issues

Diagnosis:

Port-forward commands fail or connections time out.

Resolution:

- Verify kubectl is configured: kubectl config current-context

- Check that the target service exists: kubectl -n trustgraph get svc

- Ensure no other process is using the port (e.g., port 8088, 8888, or 3000)

- Try restarting the port-forward with verbose logging: kubectl port-forward -v=6 ...

- Check AKS cluster status: az aks show --resource-group trustgraph-rg --name trustgraph-aks

Service Failure

Pods in CrashLoopBackOff

Diagnosis:

# Find crashing pods

kubectl -n trustgraph get pods | grep CrashLoopBackOff

# View logs from crashed container

kubectl -n trustgraph logs <pod-name> --previous

Resolution:

Check the logs to identify why the container is crashing. Common causes:

- Application errors (configuration issues)

- Missing dependencies (ensure all required services are running)

- Incorrect secrets or environment variables

- Resource limits too low

- Azure credentials not properly configured

Service not responding

Diagnosis:

Check service and pod status:

kubectl -n trustgraph get svc

kubectl -n trustgraph get pods

kubectl -n trustgraph logs <pod-name>

Resolution:

- Verify the pod is running and ready

- Check pod logs for errors

- Ensure port-forwarding is active for the service

- Use tg-verify-system-status to check overall system health

- Check AKS cluster health: az aks show --resource-group trustgraph-rg --name trustgraph-aks

Azure-Specific Issues

AKS cluster creation fails

Diagnosis:

Check Azure subscription and permissions:

az account show

az role assignment list --assignee YOUR_USER_ID

Resolution:

- Verify you have sufficient quota for AKS in your region

- Request quota increases via Azure Portal if needed

- Ensure your account has Microsoft.ContainerService/managedClusters/write permission

- Try a different Azure region if capacity is unavailable

- Check service health: Azure Status

Azure AI Foundry quota exceeded

Diagnosis:

Error messages about Azure AI quota or rate limits.

Resolution:

- Check AI service quotas in Azure Portal under “Quotas”

- Request quota increases if needed

- Switch to Cognitive Services (OpenAI) if AI Foundry quota is unavailable

- Implement rate limiting in your application

- Consider upgrading to a higher pricing tier

Key Vault access denied

Diagnosis:

Errors related to Azure Key Vault access when deploying or running services.

Resolution:

- Verify the service principal has appropriate Key Vault permissions

- Check Key Vault access policies in Azure Portal

- Ensure Key Vault firewall settings allow AKS access

- Review service principal role assignments:

az role assignment list --assignee SERVICE_PRINCIPAL_ID

Shutting down

Clean shutdown

When you’re finished with your TrustGraph deployment, clean up all resources:

pulumi destroy

Pulumi will show you all the resources that will be deleted and ask for confirmation. Type yes to proceed.

The destruction process typically takes 10-15 minutes and removes:

- All TrustGraph Kubernetes resources

- The AKS cluster

- Node pools

- Azure AI services (AI Foundry or Cognitive Services)

- Service principal

- Key Vault

- Storage account

- Resource group (if created by Pulumi)

Cost Warning: Azure charges for running AKS clusters, VMs, AI services, and storage. Make sure to destroy your deployment when you’re not using it to avoid unnecessary costs. AKS charges include compute and storage costs, plus a cluster management fee on paid tiers.

Verify cleanup

After pulumi destroy completes, verify all resources are removed:

# Check Pulumi stack status

pulumi stack

# Verify no resources remain

pulumi stack --show-urns

# Check Azure for remaining resources

az aks list --output table

az group show --name trustgraph-rg
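After a successful destroy, az group show reports an error for the missing group, which is the expected result but can look like a failure. As a small sketch, the helper below (the function name is made up) uses az group exists instead, which prints true or false.

```shell
# Succeed if resource group $1 no longer exists.
resource_group_gone() {
    [ "$(az group exists --name "$1")" = "false" ]
}

# Usage (requires a logged-in Azure CLI):
#   resource_group_gone trustgraph-rg && echo "Resource group removed"
```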

Delete the Pulumi stack

If you’re completely done with this deployment, you can remove the Pulumi stack:

pulumi stack rm dev

This removes the stack’s state but doesn’t affect any cloud resources (use pulumi destroy first).

Cost Optimization

Monitor Costs

Keep track of your Azure spending:

- Navigate to Cost Management + Billing in Azure Portal

- View cost analysis and breakdown by resource

- Set up budget alerts

Cost-Saving Tips

- Spot VMs: Use Azure Spot VMs for non-production workloads (up to 90% cheaper)

- Reserved Instances: Purchase 1 or 3-year reserved instances for production (up to 72% savings)

- Autoscaling: Configure cluster autoscaler to scale down during idle periods

- Dev/Test pricing: Use Azure Dev/Test subscription for development environments

- Shut down non-production: Stop dev/test clusters when not in use

- Right-size VMs: Choose appropriate VM sizes based on actual usage

Example cost estimates (East US):

- AKS management: Free on the AKS Free tier (you only pay for VMs)

- 2 x Standard_D2s_v3 nodes: ~$140/month

- Azure AI Foundry: Pay per use (varies by model and requests)

- Cognitive Services: Pay per use (varies by model and requests)

- Storage & Key Vault: ~$10-20/month

- Total estimated: ~$150-200/month for basic deployment (plus AI usage)
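The node figure above can be reproduced as nodes x hourly rate x hours per month. The sketch below uses 730 hours per month (8760 / 12) and an hourly rate of 0.096 USD, which is an assumption chosen to match the ~$140/month estimate and not a quoted price. Check current Azure pricing for your region and VM size.

```shell
# Estimate monthly compute cost: nodes x hourly rate x 730 hours.
# $1 = number of nodes, $2 = hourly rate per node in USD.
monthly_compute_cost() {
    awk -v nodes="$1" -v rate="$2" 'BEGIN { printf "%.2f\n", nodes * rate * 730 }'
}

# The 0.096 USD/hour rate is an ASSUMPTION, not a quoted Azure price.
monthly_compute_cost 2 0.096
```

Changing the first argument gives a quick estimate of what a larger node pool would cost.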

Switching Between AI Models

You can switch between Azure AI Foundry and Cognitive Services:

- Copy the desired configuration:

# For AI Foundry (Phi-4)

cp resources.yaml.mls resources.yaml

# For Cognitive Services (OpenAI)

cp resources.yaml.cs resources.yaml

- Update Pulumi configuration:

# Update the stack config based on your choice

pulumi config set aiEndpointModel "azureml://registries/azureml/models/Phi-4"

# OR

pulumi config set openaiModel gpt-4o-mini

- Re-deploy:

pulumi up

Next Steps

Now that you have TrustGraph running on Azure:

- Guides: See Guides for things you can do with your running TrustGraph

- Scale the cluster: Configure AKS autoscaling or increase node pool size

- Production hardening: Set up Azure Front Door, Application Gateway, and private AKS cluster

- Integrate Azure services: Connect to Azure Storage, Azure SQL, or Cosmos DB

- CI/CD: Set up Azure DevOps or GitHub Actions for automated deployments

- Monitoring: Integrate with Azure Monitor and Application Insights

- Multi-region: Deploy across multiple Azure regions for high availability

- Azure AD integration: Configure authentication with Azure Active Directory

- Advanced AI: Explore Azure OpenAI fine-tuning or custom models

Additional Resources

- TrustGraph Azure Pulumi Repository - Full source code and configuration

- AKS Best Practices - Microsoft’s recommendations

- Azure AI Foundry Documentation - Learn more about Azure’s AI platform

- Azure Free Account - Information about free credits and services